Edition #11 Levels of abstraction

High- vs low-level code, art of statistics, NLP resources, skill acquisition

🖇 Levels of abstraction

When choosing a framework for data science or data viz work, sometimes we need to choose at what level of abstraction to operate.

🔎 High vs Low

At the low levels, you might be coding from scratch. At the high levels, you might be using a plug-and-play tool. Flexibility at one end, and convenience at the other end. Time-consuming in one approach, embracing constraints in another.

For example, viz offers a plethora of tools. Vega is a higher-level abstraction of D3, and Vega-Lite a higher-level abstraction of Vega. Plot is a JavaScript library akin to Vega-Lite, while Altair is a Python cousin. At the highest level of all, there are drag-and-drop tools like Tableau, Raw, or Data Illustrator.

The higher the level of abstraction, the more canned the chart types become. Needs that many users share helped shape some of those higher-level abstractions.
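To make the contrast concrete, here is a minimal sketch of the same bar chart at two levels. The high-level version is a declarative spec in the style of Vega-Lite (the kind of JSON that Altair generates). The low-level version computes every pixel by hand and emits raw SVG. The data, the function name `bars_to_svg`, and the sizes are made up for illustration, and nothing here actually renders the spec.

```python
import json

# Toy data, invented for this sketch.
data = [
    {"category": "A", "value": 3},
    {"category": "B", "value": 7},
    {"category": "C", "value": 5},
]

# High level: a declarative Vega-Lite-style spec. We state *what* to draw
# and leave scales, axes, and layout to the library.
high_level_spec = {
    "data": {"values": data},
    "mark": "bar",
    "encoding": {
        "x": {"field": "category", "type": "nominal"},
        "y": {"field": "value", "type": "quantitative"},
    },
}


# Low level: a hypothetical helper that works out each bar's position
# and size itself, then writes the SVG markup directly.
def bars_to_svg(rows, width=300, height=120, gap=10):
    max_value = max(row["value"] for row in rows)
    bar_width = (width - gap * (len(rows) + 1)) / len(rows)
    rects = []
    for i, row in enumerate(rows):
        bar_height = row["value"] / max_value * height
        x = gap + i * (bar_width + gap)
        y = height - bar_height  # SVG's y axis points downwards
        rects.append(
            f'<rect x="{x:.1f}" y="{y:.1f}" '
            f'width="{bar_width:.1f}" height="{bar_height:.1f}" />'
        )
    body = "\n  ".join(rects)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}">\n  {body}\n</svg>'
    )


print(json.dumps(high_level_spec, indent=2))
print(bars_to_svg(data))
```

The spec is shorter and harder to get wrong, but it only offers the marks and encodings the library anticipated. The hand-rolled version has no axes or labels yet, and each of those is more code, but nothing stops you from drawing something unusual.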

In data science, one might face very similar choices. At the lower level, there are custom algorithms to tweak. At a higher level, there are existing models, packages, and APIs.

🌡️ When to use which

If there are viable quick high-level solutions for garden-variety problems, why not? When the tool does exactly what you want to do, it’s often a good idea not to reinvent the wheel.

Yet the question is: how do you know it is viable? And that concerns factors including familiarity, simplicity, reliability, maintainability, interoperability, compatibility, etc.

🧭 Time saved vs time wasted

How to judge whether something is reliable is a craft I started to appreciate not too long ago. Some tools are not so upfront about their rough edges, partly due to their creators' blind spots. Sometimes one needs to consider not just how easy a tool is to use, but how suitable it is in combination with other existing tools, given current and future context. The lesson is not to blindly use a package (even a popular one) without knowing its limitations. Otherwise, the time saved could be time wasted.

🔌 Custom made

Another scenario where a high-level solution might not be viable is when the problem takes more than a cookie cutter.

Making a chart with one line of code is awesome until you realize that it doesn't communicate the nuance in the data. Fast iteration with autoML is awesome, but it won't solve the problems people are already struggling to solve with manual ML.

However nicely packaged a pre-baked solution is, it cannot tackle the problem its creators didn’t set out to tackle in the first place.

🕳️ Multi-level

In viz one has the option to switch levels because narratives can be non-linear and multi-dimensional. One has the option to present multiple answers to multiple questions. Susie Lu called it 'traveling' between levels.

Going a bit lower gives the blessing of flexibility. Going too low slows you down.

🧉 Worth viewing

This is a section on free and open resources for endless learning.

This is a section on curious finds.

Good aggregator to get an overview of NLP data, tasks, models, hot topics and more. Despite a seemingly quizzical choice of background color, overall it is rather handy.

A list of colab notebooks on different use cases, 300+ and counting at the moment.

🔦 Kaleidoscope

Spirographs

The spiro package in R by W. Joel Schneider offers a delightful bunch of drawing capabilities with svg output. Here are a few examples of something I made using it.

📚 Recent Reads

In this second-to-last section of the edition I share books, papers, or blogs on data visualization, data science, or communication. This time I decided to make some diagrams to illustrate what the book is about.

The Art of Statistics by David Spiegelhalter

I view this as a statistical communication book, as it covers fundamental and practical stats concepts in an accessible manner through storytelling. In the author’s own words, this book tries to ‘celebrate good practice in the art and science of learning from data’.

One notion I like about the book is statistical imagination, the ability to form hypotheses on possible causes from all sorts of perspectives and devise different experimental designs to test them, e.g. through prospective cohort, retrospective cohort, case-control, etc.

It also portrays stats as a cyclical endeavor.

Conclusions from one problem-solving cycle raise more questions, and so the cycle starts over again.

Think Bayes: Bayesian Statistics in Python by Allen B. Downey

The book is useful for building up intuitions about Bayesian thinking through incremental examples.
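For a taste of that incremental style, here is a minimal sketch of a single Bayesian update, followed by a second one that reuses the first posterior as its prior. The two-bowl setup and its numbers are illustrative assumptions in the spirit of introductory Bayesian examples, and `bayes_update` is a hypothetical helper, not code from the book.

```python
from fractions import Fraction


def bayes_update(prior, likelihood):
    """Multiply prior by likelihood per hypothesis, then renormalise to sum to 1."""
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalised.values())
    return {h: p / total for h, p in unnormalised.items()}


# Assumed setup: bowl 1 holds 30 vanilla and 10 chocolate cookies,
# bowl 2 holds 20 of each. We pick a bowl at random and draw a vanilla cookie.
prior = {"bowl_1": Fraction(1, 2), "bowl_2": Fraction(1, 2)}
likelihood_vanilla = {"bowl_1": Fraction(30, 40), "bowl_2": Fraction(20, 40)}

posterior = bayes_update(prior, likelihood_vanilla)
print(posterior["bowl_1"])  # 3/5

# Put the cookie back (so the likelihoods stay the same), draw from the
# same bowl again, and see vanilla again. Yesterday's posterior is
# today's prior.
posterior_2 = bayes_update(posterior, likelihood_vanilla)
print(posterior_2["bowl_1"])  # 9/13
```

Each piece of evidence nudges the belief a little further, which is the intuition the incremental examples build.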

🍸 Jamais Vu

‘Never seen before’ - the opposite of déjà vu. This section contains either concepts new to me or terms I started to view in a new light. All concepts are fluid. Best served with a pinch of salt.

Dreyfus model of skill acquisition

The Dreyfus model constructs a ladder of skillfulness including novice, competence, proficiency, expertise, and eventually, mastery. This journey goes from following the rules to transcending the rules and developing intuition from deep understanding.

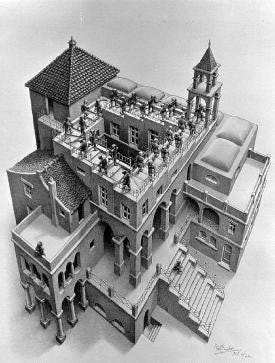

The author used chess as an example to explain, though chess is a relatively self-contained domain. In fields such as data science, people are often required to master multiple domains. Therefore it is more common to observe different levels of skills across different domains - one could be a master in one while a novice in another. If the Dreyfus model is like a ladder, the skill levels in data science may feel more like Escher’s stairwell.

The author of Atomic Habits James Clear has this suggestion on where to focus:

For the beginner, execution.

For the intermediate, strategy.

For the expert, mindset.

Metacognition

A term coined by psychologist John H. Flavell, metacognition is thinking about thinking. It describes the awareness of one’s own thought process. It helps us recognize biases and illusions.

Hilbert’s paradox of Grand Hotels

A thought experiment where a fully occupied hotel with countably infinitely many rooms can always accommodate additional guests, even infinitely many of them.
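The trick is in how the existing guests are asked to move. A minimal sketch, assuming rooms are numbered 1, 2, 3, ... and checking the moves only on a finite prefix of the hotel (the function names are made up for illustration):

```python
def move_for_one_newcomer(room):
    """Every guest shifts up by one room, which frees room 1."""
    return room + 1


def move_for_infinitely_many(room):
    """Every guest moves to double their room number, which frees all odd rooms."""
    return 2 * room


def room_for_newcomer(k):
    """The k-th newcomer (k = 1, 2, 3, ...) takes the k-th odd room."""
    return 2 * k - 1


# We cannot loop over an infinite hotel, so inspect the first few rooms.
n = 10
guests = range(1, n + 1)

after_one = [move_for_one_newcomer(r) for r in guests]
print(after_one)  # [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]

old_guest_rooms = {move_for_infinitely_many(r) for r in guests}
newcomer_rooms = {room_for_newcomer(k) for k in guests}

# Old guests land only in even rooms, newcomers only in odd rooms,
# so no room is ever double-booked.
print(old_guest_rooms & newcomer_rooms)  # set()
```

Nobody is evicted in either case: every existing guest has a well-defined new room, and every newcomer does too.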